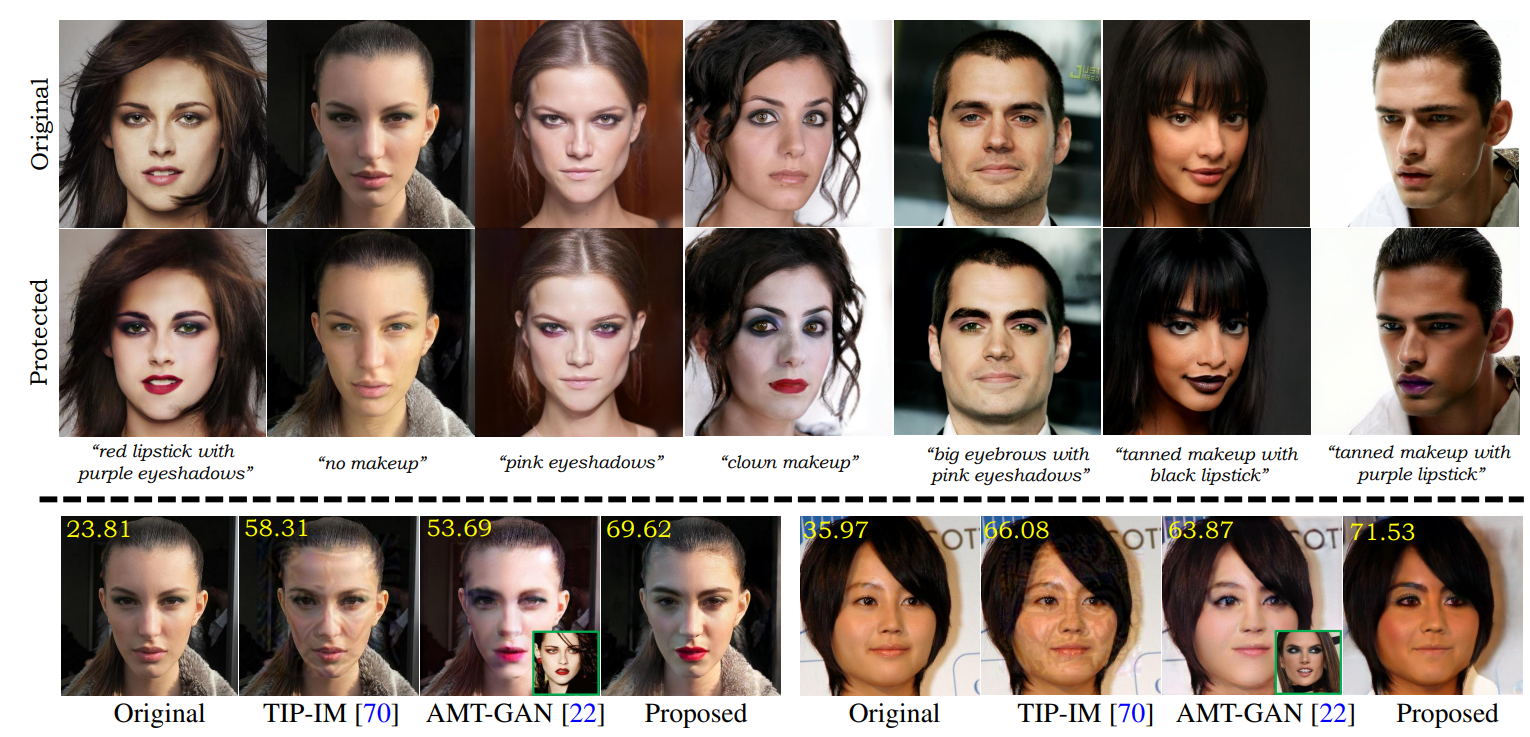

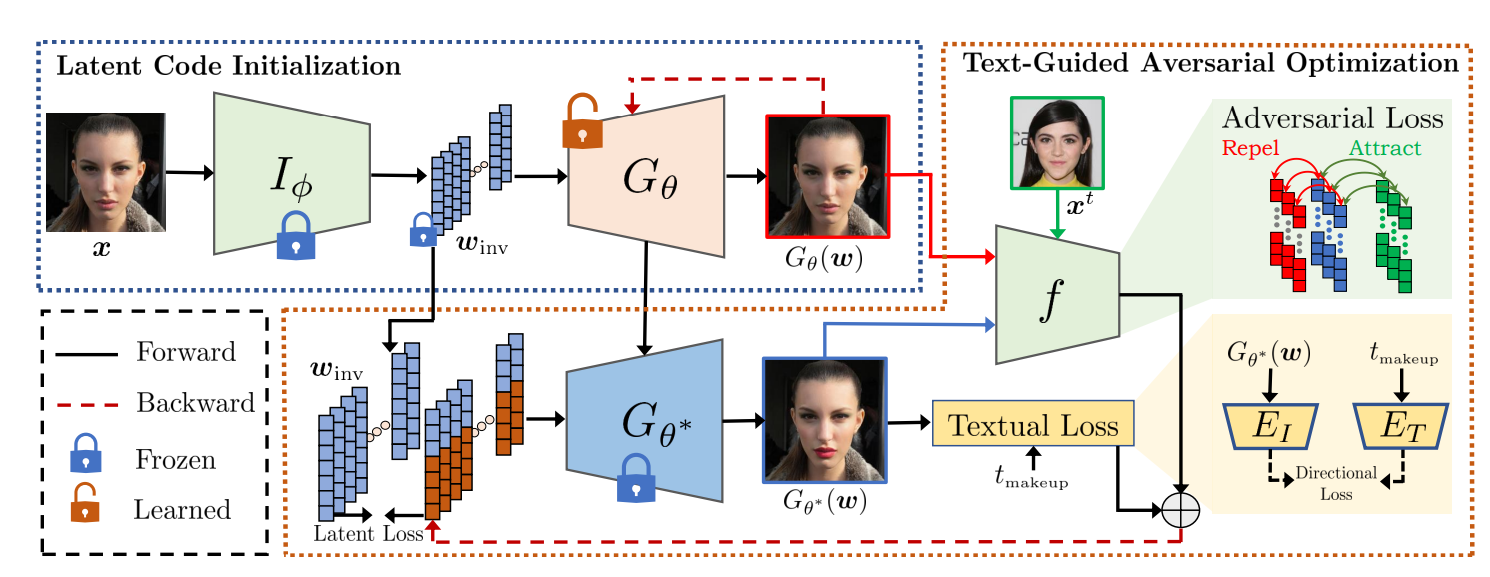

The paper presents an approach to facial privacy protection that searches for adversarial latent codes in the low-dimensional manifold of a pretrained generative model. Because the search stays on that manifold, the protected images look "naturalistic" and do not degrade the user experience. Two signals guide the search through the latent space: a user-defined makeup text prompt, which determines the visible change, and an identity-preserving regularization, which keeps the result recognizable as the original person to human viewers. The authors report that the generated faces show stronger black-box transferability than prior facial privacy protection approaches and remain effective against commercial face recognition systems.
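The latent search described above can be illustrated with a minimal sketch. This is not the authors' implementation: small fixed linear maps (`FACE_MODEL`, `TEXT_MODEL`) stand in for the pretrained generator, the face recognition model, and the text-image encoder, and every name, weight, and hyperparameter below is a hypothetical choice made for illustration. The sketch only shows the shape of the objective: an adversarial term, a text-guidance term, and an identity-preserving term that keeps the latent code close to its starting point.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)) + 1e-12)

def matvec(m, v):
    return [dot(row, v) for row in m]

# Hypothetical stand-ins for real networks (assumptions, not from the paper).
FACE_MODEL = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]  # latent -> face embedding
TEXT_MODEL = [[0, 0, 1, 0], [0, 0, 0, 1]]                # latent -> text-image space
TARGET_EMB = [0.0, 1.0, 0.0]  # embedding of the identity to be impersonated
TEXT_EMB = [1.0, 1.0]         # embedding of the makeup text prompt

def total_loss(w, w0, lam_text=0.5, lam_id=0.1):
    # Adversarial term: pull the face embedding toward the target identity.
    adv = 1.0 - cosine(matvec(FACE_MODEL, w), TARGET_EMB)
    # Text-guidance term: pull the image toward the makeup prompt.
    text = 1.0 - cosine(matvec(TEXT_MODEL, w), TEXT_EMB)
    # Identity-preserving term: stay close to the original latent code.
    ident = sum((a - b) ** 2 for a, b in zip(w, w0))
    return adv + lam_text * text + lam_id * ident

def numerical_grad(f, w, eps=1e-5):
    # Central finite differences; real pipelines would backpropagate instead.
    grad = []
    for i in range(len(w)):
        up, down = w[:], w[:]
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

def search_latent(w0, steps=300, lr=0.02):
    # Plain gradient descent on the combined objective, starting from w0.
    w = w0[:]
    for _ in range(steps):
        g = numerical_grad(lambda v: total_loss(v, w0), w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w

w0 = [1.0, 0.5, -0.5, 0.2]  # latent code of the original face (made up)
w_adv = search_latent(w0)

initial_loss = total_loss(w0, w0)
final_loss = total_loss(w_adv, w0)
cos_before = cosine(matvec(FACE_MODEL, w0), TARGET_EMB)
cos_after = cosine(matvec(FACE_MODEL, w_adv), TARGET_EMB)
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
print(f"similarity to target identity: {cos_before:.3f} -> {cos_after:.3f}")
```

Finite differences keep the sketch dependency-free. Raising `lam_id` in this toy setup trades adversarial strength for a latent code that stays nearer the original, which mirrors the role the summary assigns to the identity-preserving regularization.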

Publication date: Jun 16, 2023

Project Page: https://github.com/fahadshamshad/Clip2Protect

Paper: https://arxiv.org/pdf/2306.10008.pdf