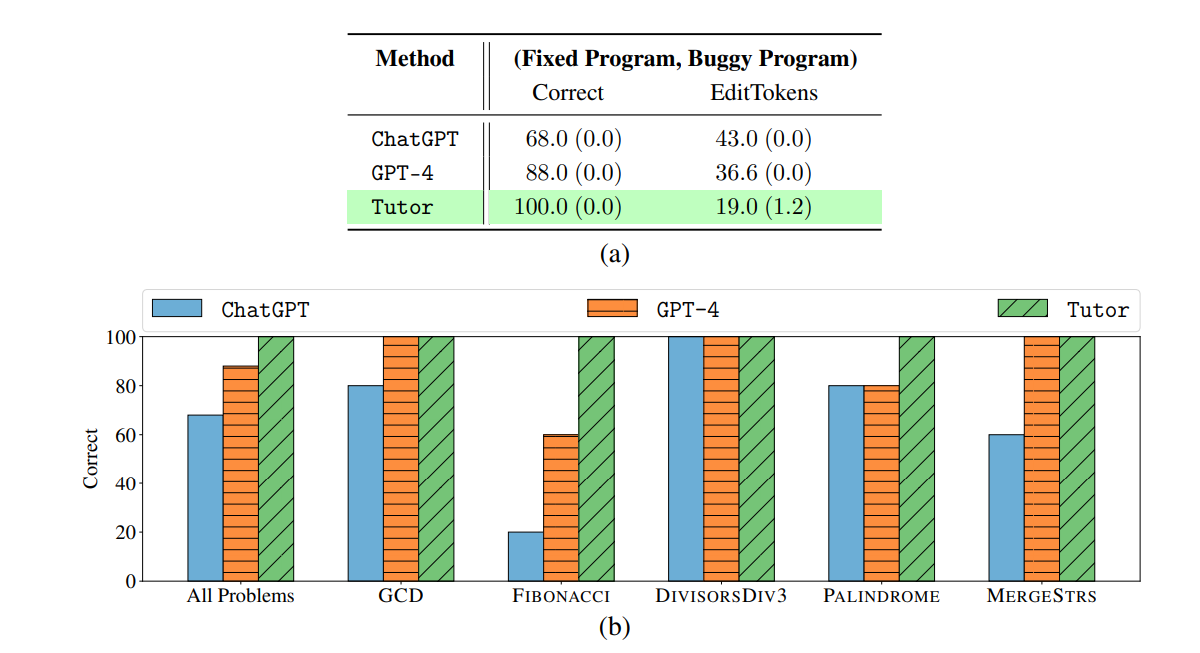

The paper “Generative AI for Programming Education: Benchmarking ChatGPT, GPT-4, and Human Tutors” systematically evaluates two generative AI models, ChatGPT (based on GPT-3.5) and GPT-4, in the context of programming education. The authors compare these models with human tutors across six scenarios: program repair, hint generation, grading feedback, pair programming, contextualized explanation, and task creation. The evaluation uses introductory Python programming problems and real-world buggy programs from an online platform.

The results show that GPT-4 substantially outperforms ChatGPT and comes close to the performance of human tutors in several scenarios. However, the study also identifies scenarios where GPT-4 still struggles, such as grading feedback and task creation. These gaps point to future directions for improving such models in programming education. Overall, the study underscores the potential of generative AI models to enhance computing education and power next-generation educational technologies.

Publication date: June 29, 2023

Project Page: N/A

Paper: https://arxiv.org/pdf/2306.17156.pdf