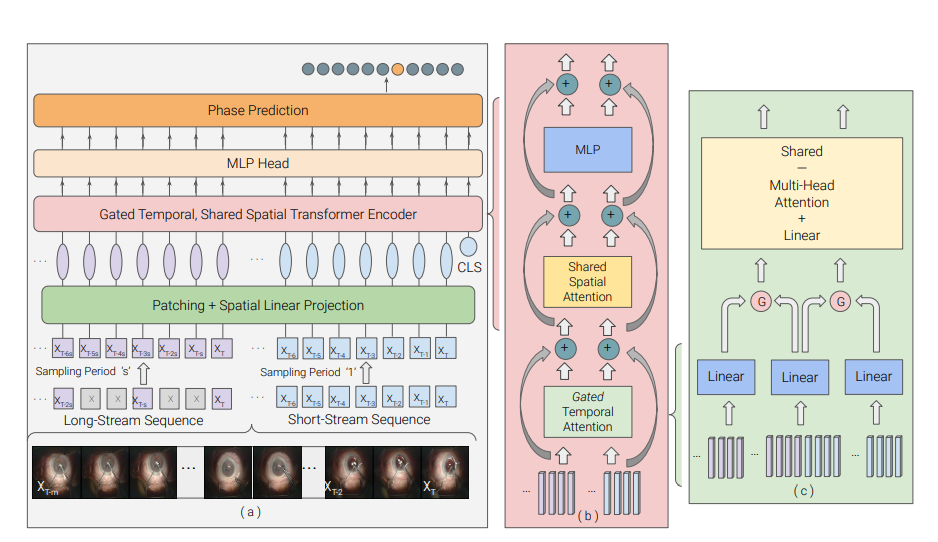

GLSFormer is a transformer-based model for automated surgical step recognition. It addresses two limitations of current state-of-the-art methods, which either model spatial and temporal information separately or focus only on short-range temporal information. Using a gated-temporal attention mechanism, it combines short-term and long-term spatio-temporal feature representations. The method was evaluated on two cataract surgery video datasets, where it outperformed the other methods it was compared against. These results support the approach’s suitability for step recognition in complex surgical procedures.
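
To make the idea of gating between short-term and long-term features concrete, here is a minimal sketch of a generic sigmoid-gated fusion. It is an illustrative assumption, not the formulation used in GLSFormer: the function name `gated_fusion`, the scalar gate, and the parameters `gate_weights` and `gate_bias` are all hypothetical, and the paper and project page remain the reference for the actual gated-temporal attention design.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(short_feat, long_feat, gate_weights, gate_bias=0.0):
    """Blend two equal-length feature vectors with one scalar gate.

    Hypothetical illustration only: the gate is computed from the
    concatenation of both vectors, then used as a convex mixing weight.
    This is a generic gating pattern, not GLSFormer's actual mechanism.
    """
    if len(short_feat) != len(long_feat):
        raise ValueError("short_feat and long_feat must have the same length")
    concat = list(short_feat) + list(long_feat)
    if len(gate_weights) != len(concat):
        raise ValueError("gate_weights must match the concatenated length")

    # Scalar gate in (0, 1): values near 1 favour the short-term features.
    logit = sum(w * x for w, x in zip(gate_weights, concat)) + gate_bias
    gate = sigmoid(logit)

    # Convex combination of the two feature vectors, element by element.
    fused = [gate * s + (1.0 - gate) * l for s, l in zip(short_feat, long_feat)]
    return fused, gate


# With all-zero gate weights the gate is exactly 0.5, so the result is the mean.
fused, gate = gated_fusion([1.0, 3.0], [3.0, 1.0], [0.0, 0.0, 0.0, 0.0])
```

In a trained model the gate parameters would be learned, so the network could decide per input how much weight short-range context receives relative to long-range context. A scalar gate is the simplest choice for a sketch; per-channel or per-token gates are common alternatives.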

Publication date: 20th July 2023

Project Page: https://github.com/nisargshah1999/GLSFormer

Paper: https://arxiv.org/pdf/2307.11081.pdf