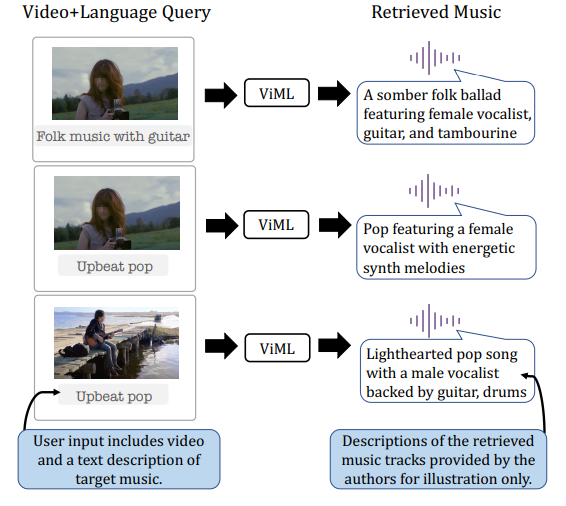

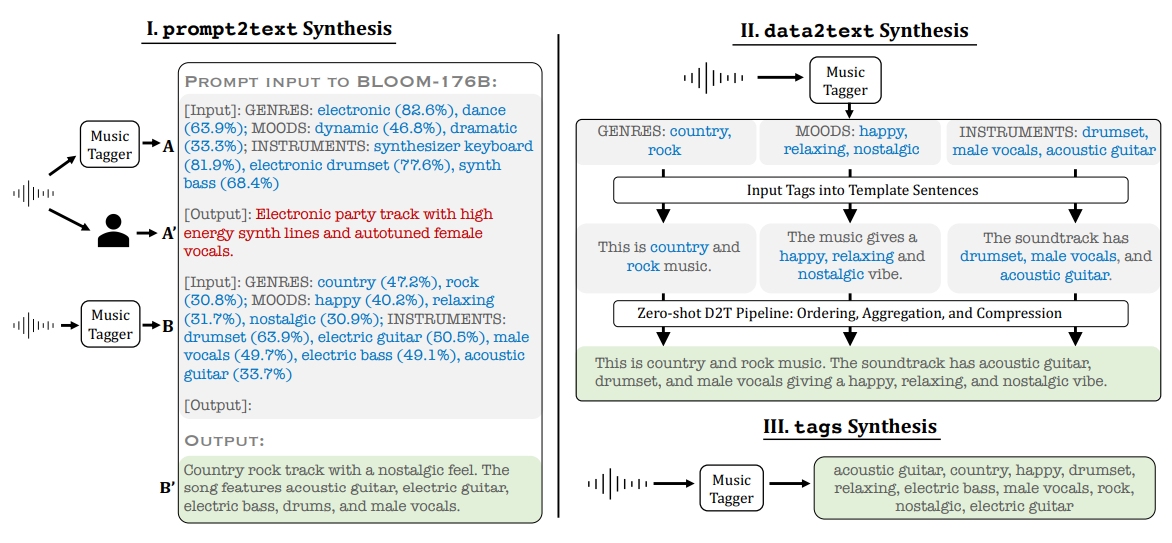

This paper introduces a method for recommending music for a video, where the user guides the selection with a free-form natural language query. The authors address a key obstacle: existing music video datasets lack text descriptions of the music. To fill this gap, they propose a text-synthesis approach that uses a large-scale language model to generate natural language music descriptions from the outputs of a pre-trained music tagger and a small number of human-written descriptions. The synthesized descriptions are then used to train a new trimodal model, which fuses text and video input representations into a query for retrieving music samples. The model is designed so that the retrieved music matches both the visual style depicted in the video and the genre, mood, or instrumentation described in the natural language query.
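The retrieval step can be illustrated with a minimal sketch. It assumes pre-computed embeddings from hypothetical video, text, and music encoders that share one vector space. The paper's model learns how to fuse the text and video representations. Here, a fixed weighted average of unit vectors (controlled by a hypothetical `text_weight` parameter) stands in for that learned fusion, and music clips are ranked by cosine similarity to the fused query. The function names and toy embeddings are illustrative only and are not taken from the authors' code.

```python
import math
from typing import Dict, List, Sequence, Tuple


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def fuse(video_emb: Sequence[float], text_emb: Sequence[float],
         text_weight: float = 0.5) -> List[float]:
    """Hypothetical stand-in for the learned fusion: a weighted average of the
    unit-normalized video and text embeddings, renormalized to unit length."""
    v = l2_normalize(video_emb)
    t = l2_normalize(text_emb)
    mixed = [(1.0 - text_weight) * a + text_weight * b for a, b in zip(v, t)]
    return l2_normalize(mixed)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def retrieve(video_emb: Sequence[float], text_emb: Sequence[float],
             music_bank: Dict[str, Sequence[float]], k: int = 3,
             text_weight: float = 0.5) -> List[Tuple[str, float]]:
    """Rank music clips by cosine similarity to the fused video+text query."""
    query = fuse(video_emb, text_emb, text_weight)
    scored = [(name, cosine(query, emb)) for name, emb in music_bank.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy 3-d embeddings (hypothetical): one axis loosely tracks the video's visual
# style, one tracks "jazz piano" in the text query, one tracks an unrelated style.
video = [1.0, 0.0, 0.0]
text = [0.0, 1.0, 0.0]
bank = {
    "ambient_pad": [1.0, 0.1, 0.0],
    "jazz_piano": [0.7, 0.7, 0.0],
    "heavy_metal": [0.0, 0.0, 1.0],
}

print(retrieve(video, text, bank, k=2))  # top match: jazz_piano
```

Setting `text_weight=0.0` ignores the language query and ranks by the video alone, which in this toy bank promotes `ambient_pad` to the top instead. This shows, under the stated assumptions, how a text query can steer retrieval toward a requested genre or instrumentation while the video embedding still anchors the result.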

Publication date: June 15, 2023

Project Page: https://www.danielbmckee.com/language-guided-music-for-video

Paper: https://arxiv.org/pdf/2306.09327.pdf