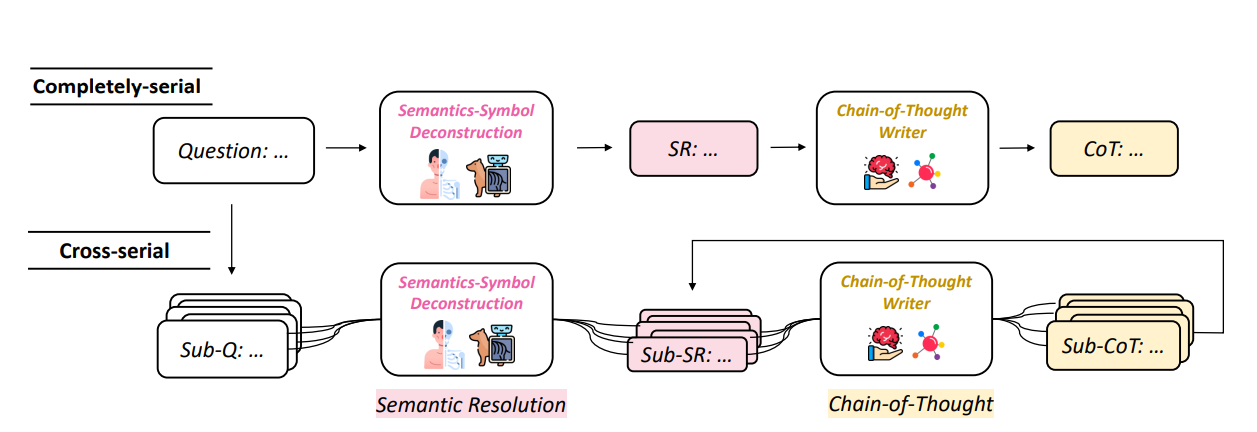

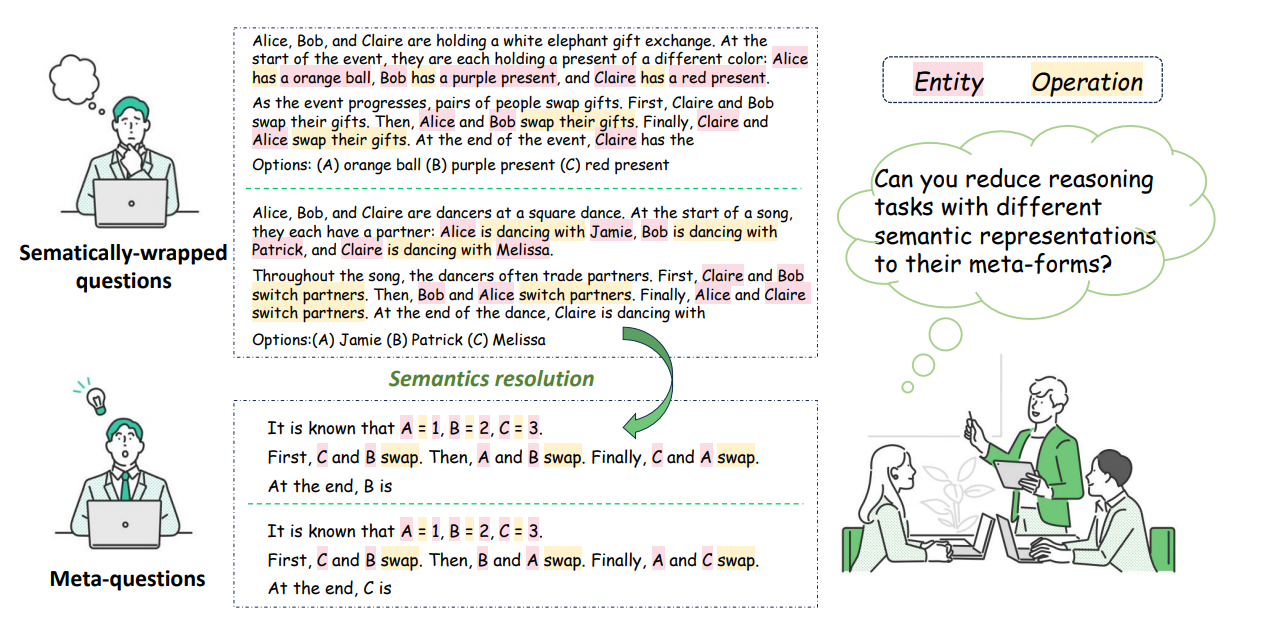

This paper introduces Meta-Reasoning, an approach for improving the reasoning capabilities of large language models (LLMs). Drawing on the linguistic notion of symbols, the authors propose semantics-symbol deconstruction, which simplifies natural language by reducing questions that share a reasoning structure to a similar symbolic representation. This lets LLMs learn the common formulation and general solution of a reasoning problem even when it is phrased with different natural-language semantics. Under the proposed paradigm, LLMs perform semantics-symbol deconstruction automatically. This lets them learn by analogy and makes in-context learning more data-efficient. The experiments show that Meta-Reasoning substantially improves LLMs' reasoning performance while using fewer demonstrations.
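The following toy Python sketch illustrates the idea. It is not the authors' implementation. In Meta-Reasoning the LLM performs the deconstruction itself from in-context demonstrations, whereas here the mapping from words to symbols is supplied by hand. The helper `deconstruct`, the role prefixes `P` (people) and `O` (objects), and both example questions are hypothetical. The sketch shows two questions with different surface semantics reducing to the same symbolic form, so one demonstration of the general solution could serve both.

```python
import re


def deconstruct(question: str, roles: dict[str, list[str]]) -> str:
    """Hypothetical toy version of semantics-symbol deconstruction.

    `roles` maps a symbol prefix (e.g. "P" for people, "O" for objects) to the
    surface words that fill that role, in order of first appearance.
    Each word is replaced by prefix + index, e.g. "Alice" -> "P1".
    The mapping is given by hand here; in the paper the LLM does this step.
    """
    mapping: dict[str, str] = {}
    for prefix, words in roles.items():
        for i, word in enumerate(words, start=1):
            mapping[word] = f"{prefix}{i}"
    # Try longer phrases first and substitute in a single pass, so symbols
    # that were just inserted are never rewritten again.
    alternatives = sorted(mapping, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, alternatives)) + r")\b")
    return pattern.sub(lambda m: mapping[m.group(0)], question)


# Two hypothetical questions with the same structure but different wording.
q1 = "Alice has a red ball. Bob has a blue ball. Alice and Bob swap. What does Alice have?"
q2 = "Carol has a novel. Dave has a poem. Carol and Dave swap. What does Carol have?"

s1 = deconstruct(q1, {"P": ["Alice", "Bob"], "O": ["red ball", "blue ball"]})
s2 = deconstruct(q2, {"P": ["Carol", "Dave"], "O": ["novel", "poem"]})

print(s1)        # P1 has a O1. P2 has a O2. P1 and P2 swap. What does P1 have?
print(s1 == s2)  # True
```

After deconstruction, a symbolic answer such as "P1 has O2" can be mapped back through each question's own mapping, giving "blue ball" for the first question and "poem" for the second.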

Publication date: June 30, 2023

Project Page: N/A

Paper: https://arxiv.org/pdf/2306.17820.pdf