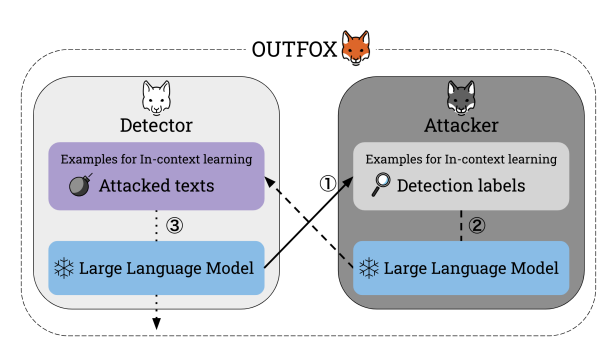

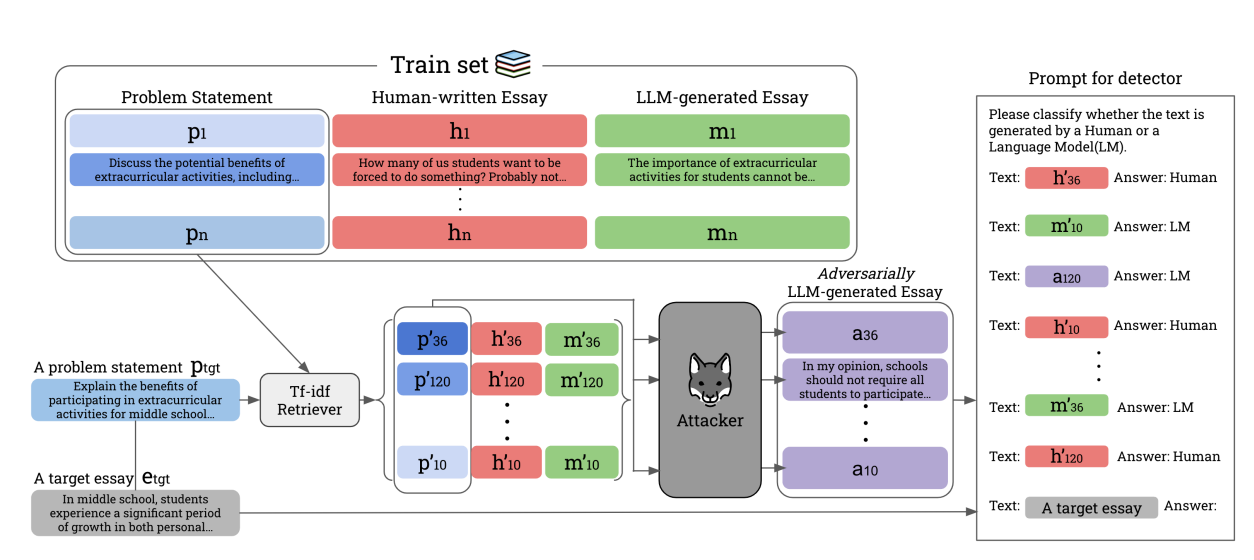

Large Language Models (LLMs) generate text that is fluent enough to be hard to distinguish from human writing. This opens the door to misuse in areas such as education, where students could use LLMs to complete their assignments. Existing detection methods struggle to identify LLM-generated text, particularly when it has been paraphrased. The paper proposes a framework named OUTFOX to improve the robustness of LLM-generated text detectors. In this framework, the detector and the attacker each learn from the other's output, so the detector is exposed to generated essays that are harder to identify.

Publication date: July 21, 2023

Project Page: Not Provided

Paper: https://arxiv.org/pdf/2307.11729.pdf