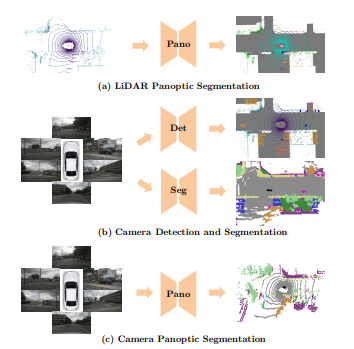

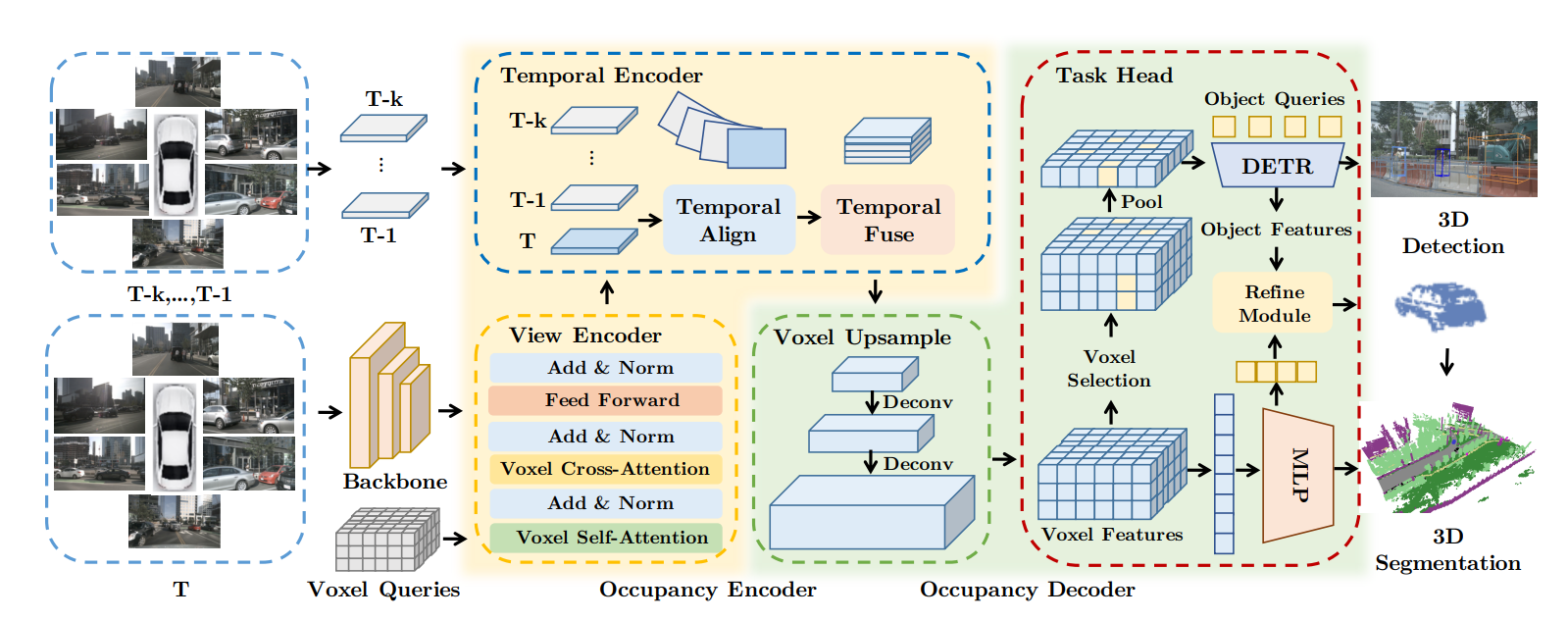

The paper introduces PanoOcc, a method that aims to build a comprehensive model of the surrounding 3D world, a key requirement for autonomous driving. Existing perception tasks such as object detection, road structure segmentation, depth and elevation estimation, and open-set object localization each cover only a small facet of holistic 3D scene understanding. This divide-and-conquer strategy simplifies algorithm development but gives up an end-to-end, unified solution to the problem. PanoOcc addresses this limitation by studying camera-based 3D panoptic segmentation, with the goal of a unified occupancy representation for camera-only 3D scene understanding. The method uses voxel queries to aggregate spatiotemporal information from multi-frame, multi-view images in a coarse-to-fine scheme, integrating feature learning and scene representation into that single occupancy representation.
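
To make the idea of voxel queries gathering multi-view, multi-frame features more concrete, here is a toy NumPy sketch. It is not the PanoOcc implementation: the paper's learned queries and attention are replaced by a plain pinhole projection with nearest-pixel sampling and averaging, temporal fusion is reduced to channel concatenation of two already aligned grids, and the coarse-to-fine step is nearest-neighbour upsampling. All function names (`voxel_centers`, `aggregate_views`, `fuse_temporal`, `upsample`) and parameters are hypothetical and chosen only for illustration.

```python
import numpy as np


def voxel_centers(grid_shape, pc_range):
    """Return (N, 3) centers of a regular voxel grid.

    pc_range is (x_min, y_min, z_min, x_max, y_max, z_max).
    """
    x0, y0, z0, x1, y1, z1 = pc_range
    lo = np.array([x0, y0, z0], dtype=float)
    hi = np.array([x1, y1, z1], dtype=float)
    axes = [lo[i] + (np.arange(n) + 0.5) * (hi[i] - lo[i]) / n
            for i, n in enumerate(grid_shape)]
    gx, gy, gz = np.meshgrid(*axes, indexing="ij")
    return np.stack([gx, gy, gz], axis=-1).reshape(-1, 3)


def aggregate_views(centers, feats, projs):
    """Average image features over the views in which each voxel is visible.

    centers: (N, 3) voxel centers.
    feats:   (V, H, W, C) per-view feature maps.
    projs:   (V, 3, 4) matrices mapping homogeneous 3D points to pixels.
    Returns (N, C) features and (N,) counts of views that saw each voxel.
    Assumption: a simple stand-in for learned attention-based sampling.
    """
    n_views, height, width, channels = feats.shape
    n = centers.shape[0]
    homo = np.concatenate([centers, np.ones((n, 1))], axis=1)
    acc = np.zeros((n, channels))
    hits = np.zeros(n)
    for v in range(n_views):
        uvw = homo @ projs[v].T
        depth = uvw[:, 2]
        valid = depth > 1e-6                      # in front of the camera
        safe = np.where(valid, depth, 1.0)        # avoid division by zero
        u = np.floor(uvw[:, 0] / safe).astype(int)
        w = np.floor(uvw[:, 1] / safe).astype(int)
        valid &= (u >= 0) & (u < width) & (w >= 0) & (w < height)
        acc[valid] += feats[v, w[valid], u[valid]]
        hits[valid] += 1
    return acc / np.maximum(hits, 1)[:, None], hits


def fuse_temporal(curr, prev):
    """Concatenate two (X, Y, Z, C) grids along channels.

    Assumes prev has already been aligned to the current ego frame.
    """
    return np.concatenate([curr, prev], axis=-1)


def upsample(vox, factor):
    """Nearest-neighbour upsampling of an (X, Y, Z, C) grid (coarse to fine)."""
    for axis in range(3):
        vox = np.repeat(vox, factor, axis=axis)
    return vox
```

A typical call sequence under these assumptions would be `voxel_centers` to place the coarse queries, `aggregate_views` once per frame, a reshape of the result to `(X, Y, Z, C)`, then `fuse_temporal` and `upsample` before any per-voxel prediction head.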

Publication date: June 16, 2023

Project Page: https://github.com/Robertwyq/PanoOcc

Paper: https://arxiv.org/pdf/2306.10013.pdf