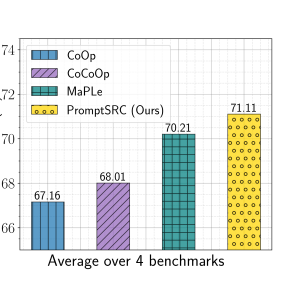

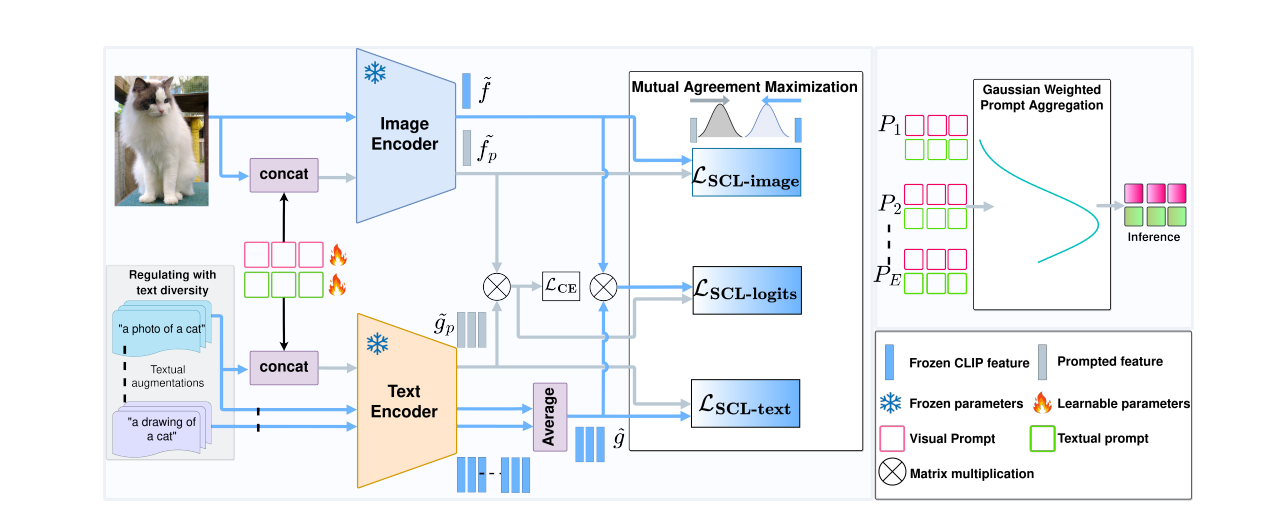

This research introduces PromptSRC, a framework for improving how prompts are used to fine-tune foundational models such as CLIP for downstream tasks. The approach aims to retain the model's original generalization capability while improving task-specific performance, thereby addressing prompt overfitting. It has three components: regulating the prompted representations using the frozen model, self-ensembling the prompts over the training trajectory, and regulating with textual diversity.
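The three components can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the function names, the L1 form of the consistency term, the Gaussian weighting over epochs, and the simple averaging over text templates are all assumptions made for illustration, and plain Python lists stand in for feature and prompt tensors.

```python
import math

def consistency_loss(prompted, frozen):
    """Component 1 (assumed L1 form): penalize drift of prompted
    features away from the frozen model's features."""
    return sum(abs(p - f) for p, f in zip(prompted, frozen)) / len(prompted)

def gaussian_weights(num_epochs, mean, std):
    """Assumed weighting scheme: one normalized Gaussian weight per epoch."""
    raw = [math.exp(-((t - mean) ** 2) / (2 * std ** 2))
           for t in range(1, num_epochs + 1)]
    total = sum(raw)
    return [w / total for w in raw]

def ensemble_prompts(snapshots, weights):
    """Component 2: weighted average of prompt vectors saved along
    the training trajectory (one snapshot per epoch)."""
    dim = len(snapshots[0])
    return [sum(w * s[i] for w, s in zip(weights, snapshots))
            for i in range(dim)]

def average_text_features(per_template_features):
    """Component 3 (assumed form): average frozen text features over
    several prompt templates to get a more diverse target."""
    n = len(per_template_features)
    dim = len(per_template_features[0])
    return [sum(f[i] for f in per_template_features) / n for i in range(dim)]

# Hypothetical usage with toy numbers.
weights = gaussian_weights(num_epochs=4, mean=3.0, std=1.0)
snapshots = [[0.0, 1.0], [0.5, 1.0], [1.0, 1.0], [1.5, 1.0]]
final_prompt = ensemble_prompts(snapshots, weights)
text_target = average_text_features([[0.0, 2.0], [2.0, 4.0]])
loss = consistency_loss([1.0, 2.0], [1.0, 4.0])
```

In an actual training loop, the consistency term would be added to the task loss at every step, while the ensemble would be formed from the prompts saved across epochs.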

Publication date: 13 Jul 2023

Project Page: Not provided

Paper: https://arxiv.org/pdf/2307.06948.pdf