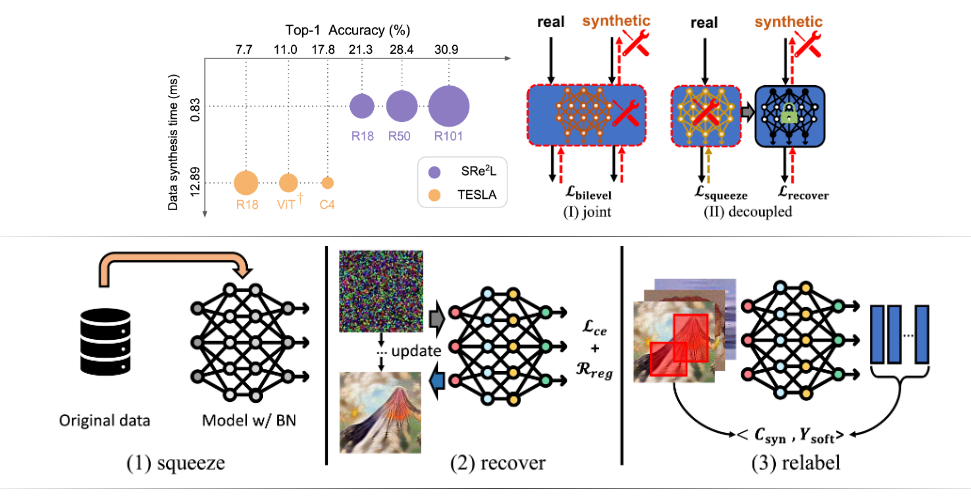

This paper introduces a dataset condensation framework called Squeeze, Recover, and Relabel (SRe2L). The framework decouples the bilevel optimization of the model and the synthetic data during training, which lets it handle varying dataset scales, model architectures, and image resolutions. The authors report several advantages: synthesized images can have arbitrary resolution, training cost and memory consumption stay low even at high resolution, and the condensed data can be used to train arbitrary evaluation network architectures. In extensive experiments on Tiny-ImageNet and the full ImageNet-1K dataset, the approach outperforms all previous state-of-the-art methods by significant margins.
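To make the decoupling concrete, here is a minimal toy sketch of the three stages in NumPy. It is not the authors' implementation: the linear softmax model, the 2-D Gaussian toy data, the hyperparameters, and the function names `squeeze`, `recover`, `relabel`, and `make_toy_data` are all assumptions chosen for illustration, and any regularizers or deep-network details of the actual method are omitted. The point it illustrates is that the model is trained once on real data, then frozen while the synthetic inputs are optimized, so the two are never optimized jointly.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def squeeze(x, y, num_classes, steps=200, lr=0.1):
    """Stage 1 (toy): train a model on the real data.
    Assumption: a bias-free linear softmax classifier stands in for a deep network."""
    w = np.zeros((x.shape[1], num_classes))
    onehot = np.eye(num_classes)[y]
    for _ in range(steps):
        grad_w = x.T @ (softmax(x @ w) - onehot) / len(x)
        w -= lr * grad_w
    return w

def recover(w, targets, dim, steps=200, lr=0.1, seed=0):
    """Stage 2 (toy): optimize synthetic inputs against the frozen model."""
    rng = np.random.default_rng(seed)
    x_syn = rng.normal(scale=0.01, size=(len(targets), dim))
    onehot = np.eye(w.shape[1])[targets]
    for _ in range(steps):
        # Gradient of cross-entropy with respect to the inputs; w is never updated here.
        grad_x = (softmax(x_syn @ w) - onehot) @ w.T
        x_syn -= lr * grad_x
    return x_syn

def relabel(w, x_syn):
    """Stage 3 (toy): assign soft labels from the trained model's predictions."""
    return softmax(x_syn @ w)

def make_toy_data(n_per_class=100, seed=0):
    """Hypothetical stand-in for a real dataset: three Gaussian blobs in 2-D."""
    rng = np.random.default_rng(seed)
    centers = np.array([[2.0, 0.0], [-2.0, 0.0], [0.0, 2.0]])
    x = np.concatenate(
        [c + rng.normal(scale=0.5, size=(n_per_class, 2)) for c in centers]
    )
    y = np.repeat(np.arange(3), n_per_class)
    return x, y

x_real, y_real = make_toy_data()
w = squeeze(x_real, y_real, num_classes=3)      # model sees real data only
targets = np.repeat(np.arange(3), 2)            # 2 synthetic samples per class
x_syn = recover(w, targets, dim=2)              # data optimized, model frozen
soft_labels = relabel(w, x_syn)                 # soft labels for the synthetic set
train_acc = (np.argmax(x_real @ w, axis=1) == y_real).mean()
```

Because `recover` only reads `w`, the cost of synthesizing data in this sketch does not depend on unrolling or re-running model training, which is the property the summary attributes to the decoupled design.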

Publication date: Not provided

Project Page: https://zeyuanyin.github.io/projects/SRe2L/

Paper: https://arxiv.org/pdf/2306.13092.pdf