The authors of this paper present UrbanIR (Urban Scene Inverse Rendering), a model that creates realistic, free-viewpoint renderings of a scene under novel lighting conditions from a single video. From that one video of an unbounded outdoor scene with unknown lighting, the model jointly infers shape, albedo, visibility, and sun and sky illumination.

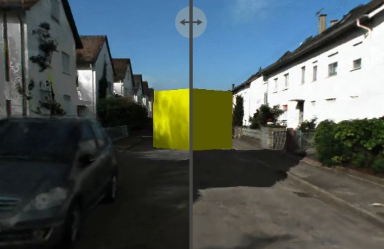

UrbanIR takes videos from cameras mounted on cars. This differs from typical NeRF-style estimation, where the same points are observed from many views. The resulting representations facilitate controllable editing, delivering photorealistic free-viewpoint renderings of relit scenes and inserted objects.

The main challenge the model addresses is that inferring inverse graphics maps is ill-posed and prone to errors that can result in strong rendering artifacts. UrbanIR uses novel losses to control these and other sources of error, including a novel loss that produces very good estimates of shadow volumes in the original scene.

The model is capable of realistic rendering and can be used for applications such as changing the sun angle, day-to-night transitions, and object insertion.
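
To make the sun-angle application more concrete, here is a minimal sketch of how a pixel could be re-shaded once albedo, surface normal, and sun visibility are known. It is not the authors' renderer: it assumes a simple Lambertian surface lit by a directional sun plus a constant sky term, and the function names (`sun_direction`, `shade_pixel`) and parameters are hypothetical. In a real relighting pipeline the sun visibility would also have to be recomputed for each new sun direction; here it is simply passed in.

```python
import math


def sun_direction(elevation_deg, azimuth_deg):
    """Unit vector pointing toward the sun, with z as the up axis (assumed convention)."""
    el = math.radians(elevation_deg)
    az = math.radians(azimuth_deg)
    return (
        math.cos(el) * math.cos(az),
        math.cos(el) * math.sin(az),
        math.sin(el),
    )


def shade_pixel(albedo, normal, sun_dir, sun_visibility, sun_rgb, sky_rgb):
    """Assumed Lambertian model: albedo * (visibility * cos(theta) * sun + sky).

    albedo, sun_rgb, sky_rgb: RGB triples.
    normal, sun_dir: unit 3-vectors.
    sun_visibility: 0.0 (fully shadowed) to 1.0 (fully lit).
    """
    # Clamp so that surfaces facing away from the sun receive no direct light.
    cos_theta = max(0.0, sum(n * s for n, s in zip(normal, sun_dir)))
    return tuple(
        a * (sun_visibility * cos_theta * sun + sky)
        for a, sun, sky in zip(albedo, sun_rgb, sky_rgb)
    )


if __name__ == "__main__":
    albedo = (0.5, 0.5, 0.5)
    ground_normal = (0.0, 0.0, 1.0)
    sun_rgb = (1.0, 1.0, 1.0)
    sky_rgb = (0.2, 0.2, 0.2)

    # Same pixel, re-shaded under a high sun and a low sun.
    for elevation in (90.0, 30.0):
        color = shade_pixel(
            albedo, ground_normal, sun_direction(elevation, 0.0), 1.0, sun_rgb, sky_rgb
        )
        print(elevation, color)
```

Because the albedo is separated from the lighting, changing the sun angle only changes the shading term. This separation is what makes edits such as day-to-night transitions possible in principle.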

Publication date: June 15, 2023

Project Page: https://urbaninverserendering.github.io/

Paper: https://arxiv.org/pdf/2306.09349.pdf