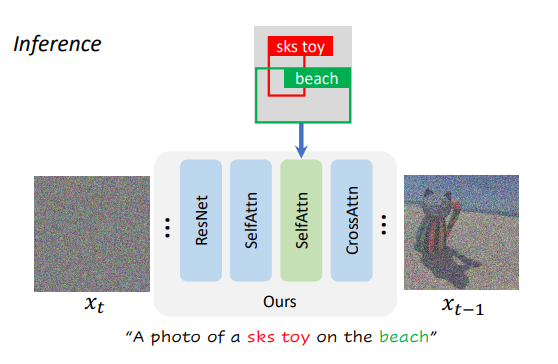

This paper presents PACGen, a model that generates personalized objects in novel contexts with both high fidelity and control over their location. The authors identify entanglement issues in existing personalized generative models and propose a straightforward, efficient data augmentation training strategy that guides the diffusion model to focus solely on object identity. Inserting plug-and-play adapter layers from a pre-trained controllable diffusion model gives the model control over the location and size of each generated personalized object. At inference time, a regionally-guided sampling technique maintains the quality and fidelity of the generated images. The authors report comparable or superior fidelity for personalized objects, yielding a robust, versatile, and controllable text-to-image diffusion model capable of generating realistic, personalized images.
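The summary above does not spell out what the data augmentation does. As a rough illustration only, the sketch below assumes it randomly rescales and repositions each training image of the object, so that size and location vary independently of identity, and records the resulting bounding box for a location-aware adapter. The function name `augment_with_box`, the square nearest-neighbor resize, the blank background, and all default values are hypothetical choices for this example, not the authors' implementation.

```python
import numpy as np


def augment_with_box(image, canvas_size=64, min_scale=0.3, max_scale=0.9, rng=None):
    """Randomly rescale and reposition `image` on a blank square canvas.

    Hypothetical helper: returns the canvas and the normalized bounding box
    (x0, y0, x1, y1) of the pasted object. Assumes max_scale <= 1.
    """
    rng = np.random.default_rng() if rng is None else rng

    # Pick a random side length for the pasted object (square for simplicity).
    scale = rng.uniform(min_scale, max_scale)
    side = max(1, int(round(scale * canvas_size)))

    # Nearest-neighbor resize to (side, side) via integer index sampling.
    h, w = image.shape[:2]
    rows = np.arange(side) * h // side
    cols = np.arange(side) * w // side
    resized = image[rows][:, cols]

    # Random top-left corner that keeps the object fully inside the canvas.
    y0 = int(rng.integers(0, canvas_size - side + 1))
    x0 = int(rng.integers(0, canvas_size - side + 1))

    # Paste onto a zero (blank) background with the same channel layout.
    canvas = np.zeros((canvas_size, canvas_size) + image.shape[2:], dtype=image.dtype)
    canvas[y0:y0 + side, x0:x0 + side] = resized

    box = (
        x0 / canvas_size,
        y0 / canvas_size,
        (x0 + side) / canvas_size,
        (y0 + side) / canvas_size,
    )
    return canvas, box


if __name__ == "__main__":
    demo = np.ones((8, 8, 3), dtype=np.float32)
    canvas, box = augment_with_box(demo, rng=np.random.default_rng(0))
    print(canvas.shape, box)
```

Under this assumption, each training step would see the same object at a different size and position, paired with a box that tells the model where it is, which is one plausible way to discourage the model from tying identity to a fixed layout.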

Publication date: June 29, 2023

Project Page: https://yuheng-li.github.io/PACGen/

Paper: https://arxiv.org/pdf/2306.17154.pdf