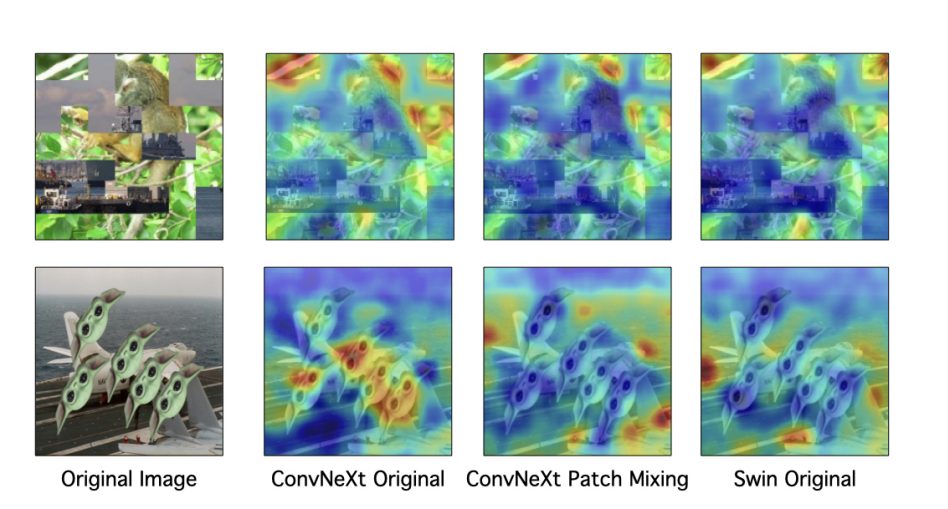

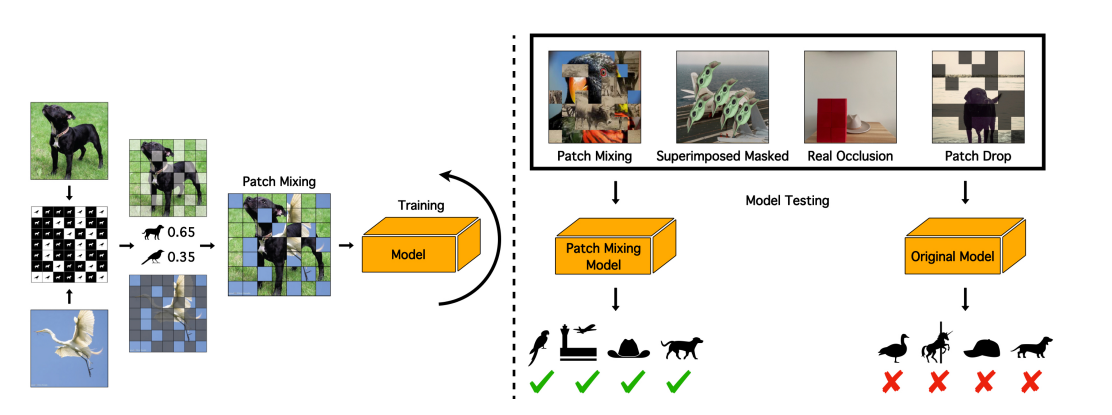

The paper “Hardwiring ViT Patch Selectivity into CNNs using Patch Mixing” investigates a property of Vision Transformers (ViTs) that the authors call “patch selectivity”: the ability to ignore out-of-context image information. The authors hypothesize that this property lets ViTs handle occlusion more easily than Convolutional Neural Networks (CNNs) do. To give CNNs the same ability, they propose a data augmentation method called “Patch Mixing”, which inserts patches from another image onto a training image and interpolates the label between the two image classes.
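The augmentation described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' released implementation: the function name `patch_mix`, the fixed square grid, the `patch` and `ratio` parameters, and the choice to weight labels by the fraction of replaced patches are all assumptions made for the example.

```python
import numpy as np

def patch_mix(img_a, img_b, label_a, label_b, patch=16, ratio=0.3, rng=None):
    """Paste a random subset of grid patches from img_b onto img_a.

    Hypothetical helper: images are (H, W) or (H, W, C) arrays of equal
    shape, labels are one-hot (or soft) float vectors. Grid size and
    mixing ratio are assumed hyperparameters, not values from the paper.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = img_a.shape[:2]
    if img_a.shape != img_b.shape or h % patch or w % patch:
        raise ValueError("images must match and be divisible by the patch size")

    grid_h, grid_w = h // patch, w // patch
    n_patches = grid_h * grid_w
    n_replaced = int(round(ratio * n_patches))

    # Pick which grid cells are taken from img_b.
    chosen = rng.choice(n_patches, size=n_replaced, replace=False)
    mask = np.zeros(n_patches, dtype=bool)
    mask[chosen] = True
    mask = mask.reshape(grid_h, grid_w)

    # Expand the patch-level mask to pixel resolution.
    pixel_mask = np.repeat(np.repeat(mask, patch, axis=0), patch, axis=1)
    pixel_mask = pixel_mask.reshape((h, w) + (1,) * (img_a.ndim - 2))
    mixed = np.where(pixel_mask, img_b, img_a)

    # Interpolate labels by the fraction of patches kept from img_a.
    lam = 1.0 - n_replaced / n_patches
    mixed_label = lam * label_a + (1.0 - lam) * label_b
    return mixed, mixed_label, mask
```

In a training loop, `img_b` and `label_b` would typically come from another sample in the same batch, and the mixed soft label would be used with a loss that accepts class probabilities.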

The study finds that ViTs already exhibit patch selectivity and neither improve nor degrade when trained with Patch Mixing, whereas CNNs trained with it acquire the ability to ignore out-of-context information and improve on occlusion benchmarks. The authors conclude that Patch Mixing is a way of simulating in CNNs the abilities that ViTs already possess. They also introduce two new datasets for evaluating image classifiers under occlusion.

Publication date: June 30, 2023

Project Page: https://arielnlee.github.io/PatchMixing/

Paper: https://arxiv.org/pdf/2306.17848.pdf