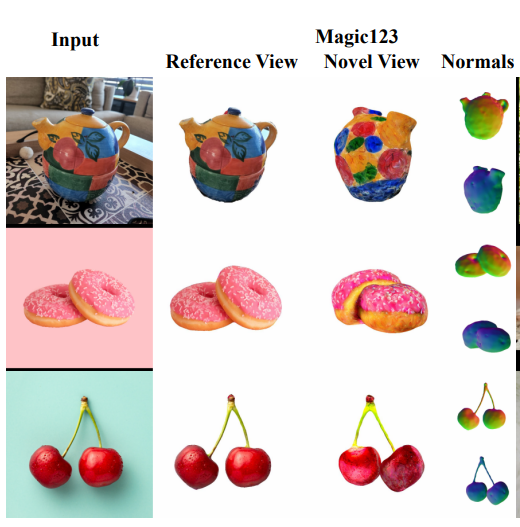

Magic123 is a two-stage, coarse-to-fine approach for generating high-quality, textured 3D meshes from a single unposed image, using both 2D and 3D priors. In the first stage, a neural radiance field is optimized to produce a coarse geometry. In the second stage, a memory-efficient differentiable mesh representation yields a high-resolution mesh with a visually appealing texture. The 3D content is learned through reference-view supervision and through novel views guided by a combination of 2D and 3D diffusion priors.

The relative strength of the 2D and 3D priors can be adjusted to trade off exploration (more imaginative geometry) against exploitation (more precise geometry). The system is also designed to encourage consistent appearance across views and to prevent degenerate solutions. Extensive experiments on synthetic benchmarks and diverse real-world images show a significant improvement over previous image-to-3D techniques.
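To make the idea of adjustable prior strength concrete, here is a minimal sketch that assumes the two priors enter the novel-view objective as a weighted sum. The names `PriorWeights`, `lambda_2d`, `lambda_3d`, and `combined_prior_loss` are hypothetical and chosen for illustration; they are not taken from the Magic123 code, and the actual formulation in the paper may differ.

```python
from dataclasses import dataclass


@dataclass
class PriorWeights:
    """Hypothetical weights for the two diffusion priors (illustrative only)."""
    lambda_2d: float = 1.0  # assumed weight on the 2D diffusion prior
    lambda_3d: float = 1.0  # assumed weight on the 3D diffusion prior


def combined_prior_loss(loss_2d: float, loss_3d: float, weights: PriorWeights) -> float:
    """Return an assumed weighted sum of the 2D and 3D prior losses.

    Raising one weight relative to the other shifts the guidance toward
    that prior, which is one simple way to expose an adjustable balance.
    """
    if weights.lambda_2d < 0 or weights.lambda_3d < 0:
        raise ValueError("prior weights must be non-negative")
    return weights.lambda_2d * loss_2d + weights.lambda_3d * loss_3d


if __name__ == "__main__":
    # Example: keep the 2D prior at full strength and halve the 3D prior.
    weights = PriorWeights(lambda_2d=1.0, lambda_3d=0.5)
    print(combined_prior_loss(loss_2d=2.0, loss_3d=4.0, weights=weights))
```

In a real training loop the two loss terms would be tensors produced by the respective diffusion models, and this combined term would be added to the reference-view supervision loss; the scalar version here only illustrates how the balance could be exposed as a pair of weights.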

Publication date: June 30, 2023

Project Page: https://guochengqian.github.io/project/magic123

Paper: https://arxiv.org/abs/2306.17843