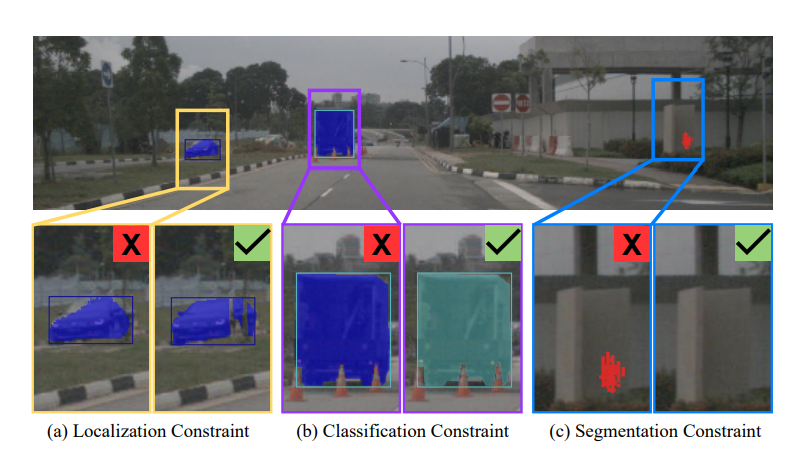

This paper presents a new multi-task active learning strategy for two interconnected vision tasks: object detection and semantic segmentation. The authors propose a method that uses the inconsistency between the two tasks' predictions to identify samples that are informative for both. They introduce three constraints that specify how the tasks are coupled, along with a method for determining which pixels belong to the object detected by a bounding box; these pixel assignments are then used to quantify the constraints as inconsistency scores.
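To make the idea of an inconsistency score concrete, here is a minimal Python sketch. It is not the authors' method: the summary does not spell out the three constraints or the pixel-assignment procedure, so the sketch swaps in one simple, assumed proxy (the fraction of pixels inside a predicted box whose segmentation label disagrees with the box's class). The function names, the `(x1, y1, x2, y2)` box format, and the mean aggregation are all hypothetical choices made for illustration.

```python
import numpy as np


def box_inconsistency(seg_map, box, cls):
    """Assumed proxy score: share of pixels inside the box whose predicted
    segmentation class differs from the detection's class (0 = consistent)."""
    x1, y1, x2, y2 = box  # hypothetical format: pixel corners, x2/y2 exclusive
    region = seg_map[y1:y2, x1:x2]
    if region.size == 0:
        # A degenerate box has no supporting pixels; treat it as fully inconsistent.
        return 1.0
    return 1.0 - float(np.mean(region == cls))


def image_inconsistency(seg_map, detections):
    """Average the per-box scores; an image without detections scores 0 here."""
    if not detections:
        return 0.0
    return float(np.mean([box_inconsistency(seg_map, b, c) for b, c in detections]))


def select_most_inconsistent(pool, budget):
    """Return indices of the `budget` unlabeled images with the highest score.

    `pool` is a list of (seg_map, detections) pairs holding model predictions.
    """
    scores = [image_inconsistency(seg, dets) for seg, dets in pool]
    order = np.argsort(scores)[::-1]  # descending by score
    return [int(i) for i in order[:budget]]


if __name__ == "__main__":
    seg = np.zeros((4, 4), dtype=int)
    seg[0:2, 0:2] = 1  # a 2x2 patch predicted as class 1
    pool = [
        (seg, [((0, 0, 2, 2), 1)]),  # box matches the patch
        (seg, [((0, 0, 4, 4), 1)]),  # box covers mostly other-class pixels
    ]
    print(select_most_inconsistent(pool, budget=1))
```

In an active learning loop, the selected indices would be sent for annotation and both task heads retrained before the next round of scoring.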

The authors conduct extensive experiments on the nuImages and A9 datasets, demonstrating that their approach outperforms existing state-of-the-art methods by up to 3.4% mDSQ on nuImages. Their approach reaches 95% of the fully-trained performance using only 67% of the available data, which corresponds to 20% fewer labels than random selection and 5% fewer labels than the state-of-the-art selection strategy.

Publication date: June 21, 2023

Project Page: N/A

Paper: https://arxiv.org/pdf/2306.12398.pdf