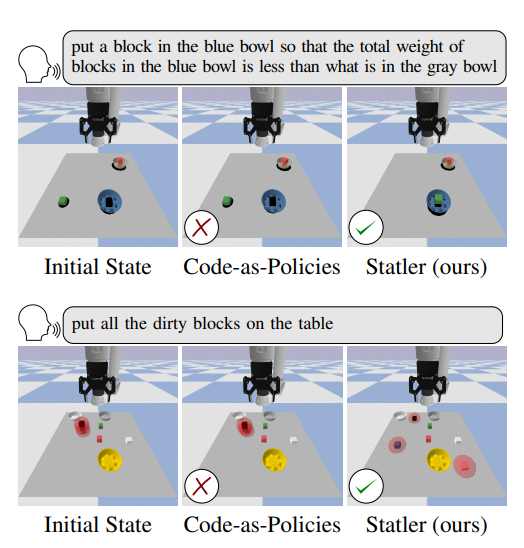

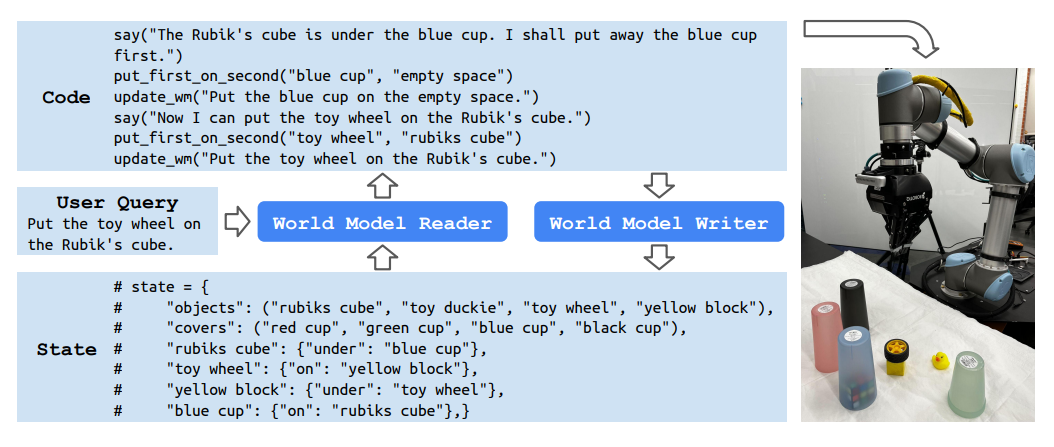

Statler is a framework for improving the reasoning of large language models (LLMs) on robotic tasks. It targets one specific limitation: the finite context window of contemporary LLMs, which makes reasoning over long time horizons difficult when the whole interaction history has to fit in the prompt. Statler instead maintains an explicit representation of the world state as a form of “memory” that persists over time, so existing LLMs can reason over longer horizons without being bound by their context length. The framework uses two instances of general-purpose LLMs, a world-model reader and a world-model writer, that interface with and maintain this world state: the reader conditions on the current state to respond to a query, and the writer updates the state to reflect the outcome. Statler has been evaluated on three simulated table-top manipulation domains and one real robot domain, where it substantially improved LLM-based robot reasoning.
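To make the reader/writer split concrete, here is a minimal sketch of the control loop, assuming both roles can be abstracted as plain functions. In Statler they are prompted LLMs. Here `toy_reader`, `toy_writer`, `run_episode`, and the cup-swapping domain are all hypothetical stand-ins chosen so the example runs offline; this is not the authors' implementation. The point it illustrates is that only the current world state is carried from step to step, not the full history of instructions.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, str]
# Hypothetical interfaces: in Statler both roles are played by prompted LLMs;
# here they are ordinary functions so the sketch runs without a model.
Reader = Callable[[State, str], str]      # (world state, instruction) -> action
Writer = Callable[[State, str], State]    # (world state, action) -> new world state


def run_episode(reader: Reader, writer: Writer, state: State,
                instructions: List[str]) -> Tuple[List[str], State]:
    """Process instructions one at a time, carrying only the world state forward."""
    actions: List[str] = []
    for instruction in instructions:
        # The reader sees the current state plus the new instruction, not the
        # full history, so its input does not grow with the number of steps.
        action = reader(state, instruction)
        actions.append(action)
        # The writer folds the effect of the action into an updated state.
        state = writer(state, action)
    return actions, state


# --- Toy stand-ins for the two LLMs (hypothetical cup-swapping domain) ---
def toy_reader(state: State, instruction: str) -> str:
    if instruction == "where is the ball?":
        # Answer directly from the maintained state.
        return f"answer {state['ball']}"
    return instruction  # e.g. "swap cup_a cup_b" is executed as given


def toy_writer(state: State, action: str) -> State:
    new_state = dict(state)  # never mutate the previous state in place
    parts = action.split()
    if parts[0] == "swap":
        a, b = parts[1], parts[2]
        if state["ball"] == a:
            new_state["ball"] = b
        elif state["ball"] == b:
            new_state["ball"] = a
    return new_state


if __name__ == "__main__":
    steps = ["swap cup_a cup_b", "swap cup_b cup_c",
             "swap cup_a cup_c", "where is the ball?"]
    actions, final_state = run_episode(toy_reader, toy_writer,
                                       {"ball": "cup_a"}, steps)
    print(actions[-1])   # answer cup_a
    print(final_state)   # {'ball': 'cup_a'}
```

Because the reader only ever sees `state` and the latest instruction, the same loop could run for many more swaps without its inputs growing. That is the property the explicit world-state memory is meant to provide, whereas keeping every past instruction in one prompt would eventually exceed the context window.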

Publication date: July 3, 2023

Project Page: https://statler-lm.github.io/

Paper: https://arxiv.org/pdf/2306.17840.pdf