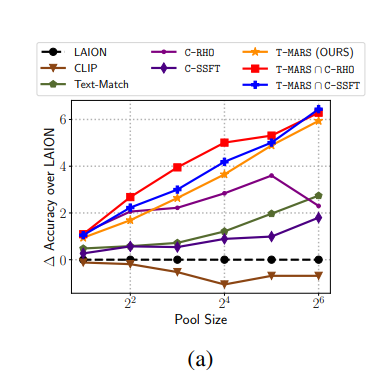

T-MARS (Text-Masking and Re-Scoring) is a data filtering approach for learning better visual representations from large-scale image-text datasets. It is motivated by the observation that a significant portion of images in such datasets contain text that overlaps with the caption, so a model trained on them can spend capacity on optical character recognition rather than on visual features. T-MARS addresses this by removing only those image-caption pairs in which the text dominates the remaining visual content. It first masks out the text in each image, then re-scores the masked image against its caption and discards pairs whose similarity score is low. The authors report that this outperforms previous filtering methods on various benchmarks, with accuracy improving linearly as data and compute are scaled exponentially.
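The mask-then-rescore idea described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `mask_text`, `cosine`, and `filter_pairs` are hypothetical, as are the `detect_text`, `embed_image`, and `embed_text` callables, which stand in for a real text detector and image/text encoders. Filling text boxes with the mean of the non-text pixels and using a fixed threshold are assumptions of this sketch, as is returning the original (unmasked) pairs.

```python
import math
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]        # toy grayscale image: rows of pixel values
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def mask_text(image: Image, boxes: Sequence[Box]) -> Image:
    """Return a copy of `image` with each text box filled by the mean non-text pixel."""
    in_box = [[False] * len(row) for row in image]
    for top, left, bottom, right in boxes:
        for r in range(max(top, 0), min(bottom, len(image))):
            for c in range(max(left, 0), min(right, len(image[r]))):
                in_box[r][c] = True
    background = [px for r, row in enumerate(image)
                  for c, px in enumerate(row) if not in_box[r][c]]
    # Assumption: fill value is the mean of all pixels outside the text boxes.
    fill = sum(background) / len(background) if background else 0.0
    return [[fill if in_box[r][c] else px for c, px in enumerate(row)]
            for r, row in enumerate(image)]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def filter_pairs(
    pairs: Sequence[Tuple[Image, str]],
    detect_text: Callable[[Image], Sequence[Box]],   # hypothetical text detector
    embed_image: Callable[[Image], Sequence[float]],  # hypothetical image encoder
    embed_text: Callable[[str], Sequence[float]],     # hypothetical text encoder
    threshold: float,
) -> List[Tuple[Image, str]]:
    """Keep pairs whose text-masked image still matches the caption."""
    kept = []
    for image, caption in pairs:
        masked = mask_text(image, detect_text(image))
        score = cosine(embed_image(masked), embed_text(caption))
        if score >= threshold:
            # The masked copy is used only for scoring; the original pair is kept.
            kept.append((image, caption))
    return kept
```

In practice the encoders would come from a pretrained image-text model and the threshold would be tuned on the dataset at hand; the sketch only shows the order of operations: detect text, mask it, re-score, then filter.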

Publication date: July 6, 2023

Project Page: https://github.com/locuslab/T-MARS

Paper: https://arxiv.org/abs/2307.03132