This paper proposes a novel knowledge distillation framework for teaching a sensorimotor student agent to drive under the supervision of a privileged teacher agent. The researchers observed that current distillation methods for sensorimotor agents often leave the student with suboptimal driving behavior. They hypothesize that this is due to inherent differences between the inputs, modeling capacity, and optimization processes of the two agents.

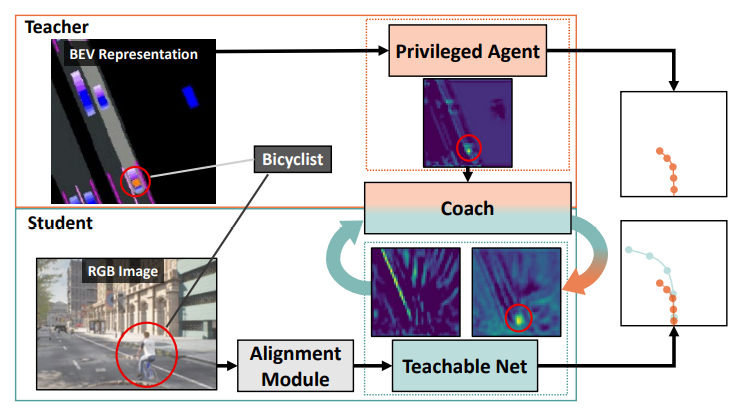

To address these limitations and close the gap between the sensorimotor agent and its privileged teacher, the researchers developed a distillation scheme in which the student learns to align its input features with the teacher’s privileged Bird’s Eye View (BEV) space. Because the two agents then share a representation space, the teacher can directly supervise the student's internal representation learning, not only its output actions.
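The idea of supervising internal features as well as outputs can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the use of mean squared error for both terms, and the `feature_weight` parameter are all assumptions made for illustration, and the features are plain lists of floats rather than real BEV tensors.

```python
from typing import List

Vector = List[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(
    student_bev: Vector,
    teacher_bev: Vector,
    student_action: Vector,
    teacher_action: Vector,
    feature_weight: float = 1.0,  # hypothetical weighting, not from the paper
) -> float:
    """Combine output imitation with feature alignment.

    action_term:  the student imitates the teacher's driving output.
    feature_term: the student's features (already projected into BEV
                  space) are pulled toward the teacher's BEV features.
    """
    action_term = mse(student_action, teacher_action)
    feature_term = mse(student_bev, teacher_bev)
    return action_term + feature_weight * feature_term


# Toy values: flattened BEV features and a single control output.
loss = distillation_loss(
    student_bev=[0.0, 0.0],
    teacher_bev=[2.0, 0.0],
    student_action=[1.0],
    teacher_action=[0.0],
    feature_weight=0.5,
)
print(loss)
```

In this sketch the feature term gives the student a training signal on its intermediate representation even when its actions already match the teacher's, which is the intuition behind aligning the two agents in a shared BEV space.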

To scaffold the difficult sensorimotor learning task, the student model is optimized via a student-paced coaching mechanism with several forms of auxiliary supervision. The researchers also propose a high-capacity, imitation-learned privileged agent that surpasses prior privileged agents in CARLA and ensures the student learns safe driving behavior.

The resulting sensorimotor agent is a robust image-based behavior cloning agent in CARLA. It improves over current models by over 20.6% in driving score without requiring LiDAR, historical observations, an ensemble of models, on-policy data aggregation, or reinforcement learning.

Publication date: June 16, 2023

Paper: https://arxiv.org/pdf/2306.10014.pdf