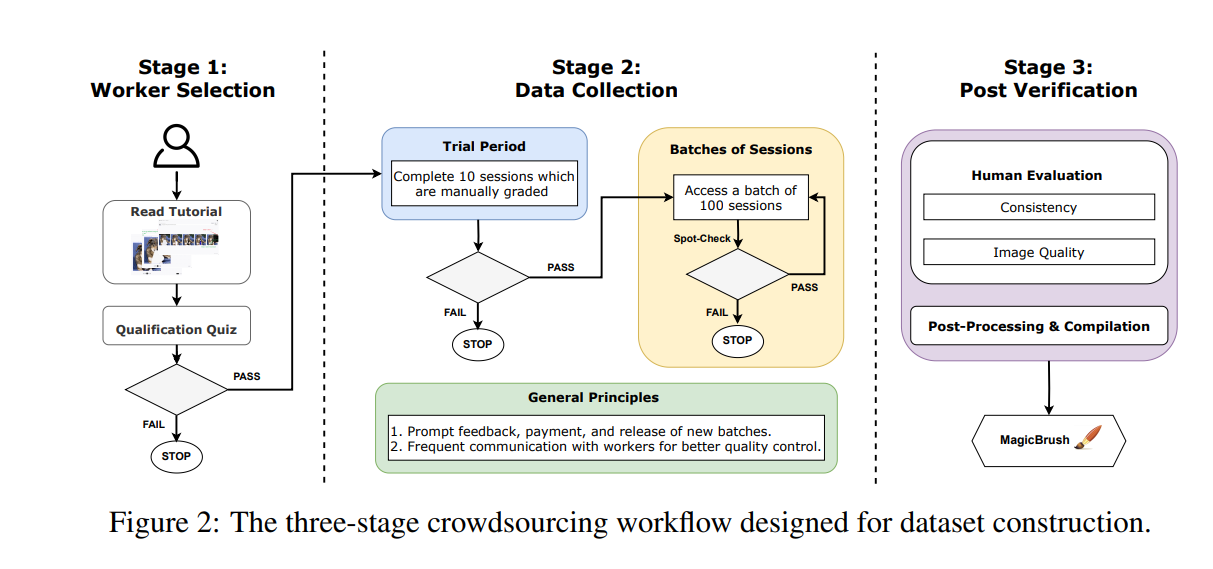

The paper introduces MagicBrush, a large-scale, manually annotated dataset for instruction-guided real image editing. The dataset addresses a limitation of existing text-guided image editing methods. These methods are either zero-shot or trained on automatically synthesized datasets that contain a high volume of noise, so in practice they often require substantial manual tuning to produce desirable outcomes.

MagicBrush comprises over 10,000 manually annotated triples (source image, instruction, target image). It covers diverse editing scenarios: single-turn, multi-turn, mask-provided, and mask-free. The authors fine-tuned InstructPix2Pix on MagicBrush and showed through human evaluation that the fine-tuned model produces much better images. Their evaluation of current editing baselines also reveals the challenging nature of the dataset and the gap between these baselines and real-world editing needs.
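To make the dataset's structure more concrete, here is a minimal Python sketch of how its records could be modeled. The names `EditTriple`, `EditSession`, `model_inputs`, and `run_session` are hypothetical and do not reflect MagicBrush's actual release format. Images are plain string identifiers so the example stays self-contained. The sketch assumes two things: in a multi-turn session, each turn starts from the previous turn's target image, and a mask is passed to the model only in the mask-provided setting.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass(frozen=True)
class EditTriple:
    """One edit: (source image, instruction, target image). Hypothetical schema."""
    source_image: str            # identifier of the image before the edit
    instruction: str             # natural-language edit instruction
    target_image: str            # identifier of the image after the edit
    mask: Optional[str] = None   # edit-region mask, used only when masks are provided


@dataclass
class EditSession:
    """Hypothetical container for one or more chained edit turns."""
    turns: List[EditTriple] = field(default_factory=list)

    def add_turn(self, triple: EditTriple) -> None:
        # Assumption: each new turn edits the previous turn's target image.
        if self.turns and triple.source_image != self.turns[-1].target_image:
            raise ValueError("turn must start from the previous turn's target image")
        self.turns.append(triple)

    @property
    def is_single_turn(self) -> bool:
        return len(self.turns) == 1


def model_inputs(triple: EditTriple, mask_provided: bool) -> dict:
    """Inputs an editing model would receive in the mask-provided or mask-free setting."""
    inputs = {"image": triple.source_image, "instruction": triple.instruction}
    if mask_provided:
        if triple.mask is None:
            raise ValueError("mask-provided editing requires a mask")
        inputs["mask"] = triple.mask
    return inputs


def run_session(session: EditSession, editor: Callable[[str, str], str]) -> List[str]:
    """Apply `editor` turn by turn, feeding each output into the next turn (illustrative)."""
    if not session.turns:
        return []
    outputs: List[str] = []
    current = session.turns[0].source_image
    for turn in session.turns:
        current = editor(current, turn.instruction)
        outputs.append(current)
    return outputs


# Usage: a two-turn session with a mask on the first turn.
session = EditSession()
session.add_turn(EditTriple("img_0.png", "put a hat on the dog", "img_1.png", mask="mask_1.png"))
session.add_turn(EditTriple("img_1.png", "make the sky purple", "img_2.png"))
print(session.is_single_turn)  # False
print(model_inputs(session.turns[1], mask_provided=False))
# {'image': 'img_1.png', 'instruction': 'make the sky purple'}
```

In the mask-free setting the model sees only the source image and the instruction. `run_session` shows one plausible way to chain an editor across turns by feeding its own outputs forward. This is an illustrative assumption, not a description of the paper's evaluation protocol.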

Publication date: June 16, 2023

Project Page: https://osu-nlp-group.github.io/MagicBrush

Paper: https://arxiv.org/pdf/2306.10012.pdf