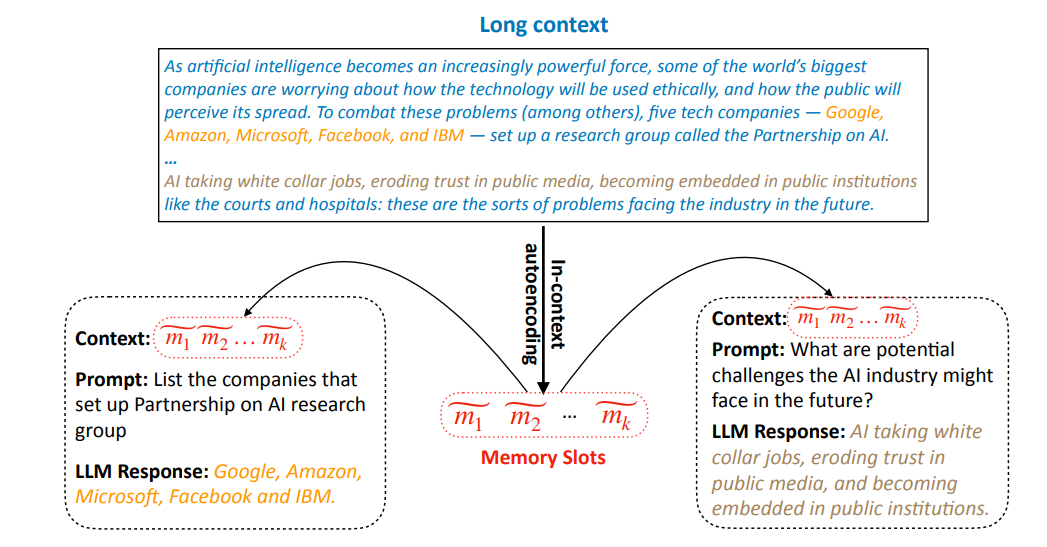

A team from Microsoft introduces the In-context Autoencoder (ICAE), a method for tackling the long-context problem in large language models (LLMs). ICAE compresses a lengthy context into a compact representation, reducing computation and memory overhead while maintaining strong performance. Trained through a combination of pretraining and fine-tuning, ICAE produces compressed context representations that the LLM can use for a variety of purposes, showing significant potential for more efficient LLM inference in real-world applications.

Publication date: 13 Jul 2023

Project Page: Not provided

Paper: https://arxiv.org/pdf/2307.06945.pdf